Optimizing the hyperparameters of your machine learning algorithm is a hard and tedious task.

Describe your experiment to Oscar in a few lines and he will take care of it for you.

```python
import math

# Get Oscar
from Oscar import Oscar

scientist = Oscar(YOUR_ACCESS_TOKEN)

# Describe your experiment
experiment = {'name': 'Square', 'parameters': {'x': {'min': -10, 'max': 10}}}

for i in range(10):
    # Get the next parameters to try from Oscar
    job = scientist.suggest(experiment)

    # Run your complex, time-consuming algorithm
    loss = math.pow(job.x, 2)

    # Tell Oscar the result
    scientist.update(job, {'loss': loss})
```

```lua
-- Get Oscar
local Oscar = require('Oscar')

local scientist = Oscar(YOUR_ACCESS_TOKEN)

-- Describe your experiment
local experiment = {name = "Square", parameters = {x = {min = -10, max = 10}}}

for i = 1, 10 do
  -- Get the next parameters to try from Oscar
  local job = scientist:suggest(experiment)

  -- Run your complex, time-consuming algorithm
  local loss = job.x ^ 2

  -- Tell Oscar the result
  scientist:update(job, {loss = loss})
end
```

Getting lost in your experiments?

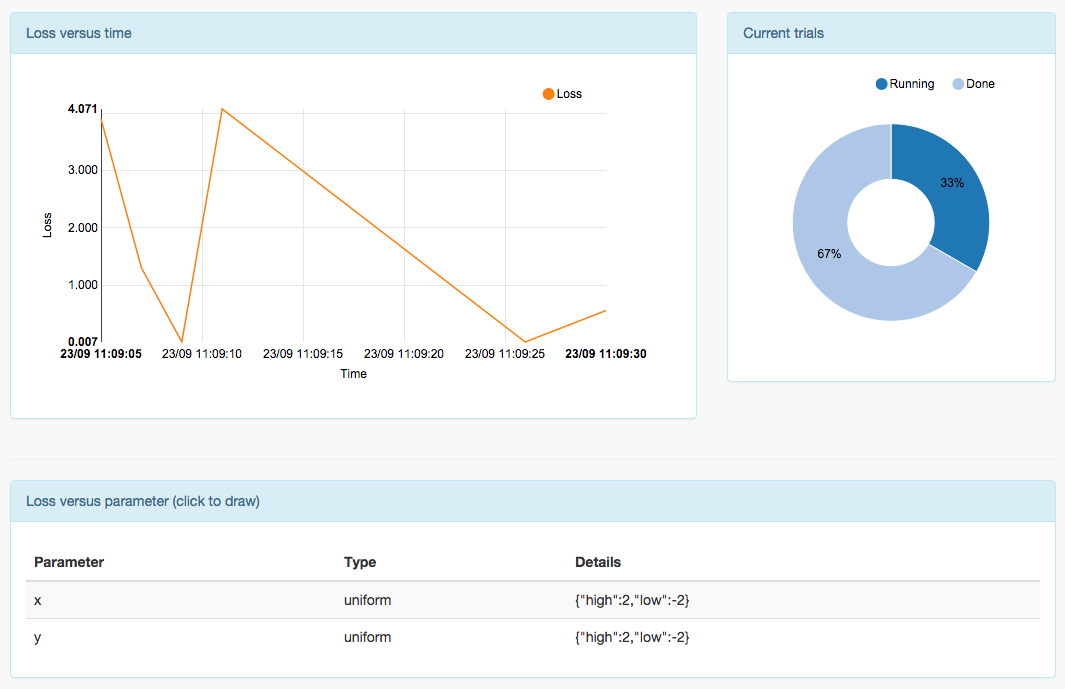

Oscar automatically chooses the most promising hyperparameters to try next, based on all previous results.
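Oscar's actual search strategy is not described here. As a rough illustration of what choosing the next trial "based on all previous results" can mean, here is a minimal sketch of a hypothetical `LocalSearchScientist` (not part of Oscar, and returning plain dictionaries rather than Oscar's job objects) that follows the same `suggest`/`update` loop: it samples uniformly until it has a result, then proposes points near the best one found so far and narrows its search radius whenever a trial fails to improve.

```python
import random


class LocalSearchScientist:
    """Hypothetical stand-in for Oscar, NOT its real algorithm.

    Adaptive random search: perturb the best point seen so far and shrink
    the perturbation radius after every trial that does not improve.
    """

    def __init__(self, seed=0, radius=0.5, shrink=0.9):
        self.rng = random.Random(seed)
        self.radius = radius  # search radius as a fraction of each parameter's range
        self.shrink = shrink  # radius multiplier applied after a non-improving trial
        self.history = []     # list of (params, loss) pairs, in trial order
        self.best = None      # best (params, loss) pair seen so far

    def suggest(self, experiment):
        params = {}
        for name, bounds in experiment["parameters"].items():
            lo, hi = bounds["min"], bounds["max"]
            if self.best is None:
                # No results yet: sample uniformly over the whole range.
                value = self.rng.uniform(lo, hi)
            else:
                # Perturb the best value found so far, then clip it to the bounds.
                step = self.radius * (hi - lo)
                center = self.best[0][name]
                value = min(hi, max(lo, center + self.rng.uniform(-step, step)))
            params[name] = value
        return params

    def update(self, job, result):
        loss = result["loss"]
        self.history.append((job, loss))
        if self.best is None or loss < self.best[1]:
            self.best = (job, loss)
        else:
            self.radius *= self.shrink


experiment = {"name": "Square", "parameters": {"x": {"min": -10, "max": 10}}}
scientist = LocalSearchScientist(seed=0)
for i in range(50):
    job = scientist.suggest(experiment)
    scientist.update(job, {"loss": job["x"] ** 2})

print(scientist.best)
```

This greedy local search is easy to follow but can get stuck in a local minimum on non-convex losses; dedicated hyperparameter optimizers often rely on more sample-efficient, model-based methods such as Bayesian optimization.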

At any time, he gives you clear insights into how each hyperparameter influences your algorithm.
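How Oscar computes these insights is not stated. One simple way to estimate a hyperparameter's influence from the trial history is the correlation ratio η² (eta squared): split the values tried for a parameter into bins and measure what share of the loss variance the bin means explain. The `influence` function below is a hypothetical sketch of that idea, unrelated to Oscar's own reports.

```python
import random
from statistics import mean, pvariance


def influence(history, name, bins=5):
    """Hypothetical helper: correlation ratio (eta squared) of the loss w.r.t. one parameter.

    history is a list of (params, loss) pairs. The parameter's observed values are
    split into equal-width bins; the result is the fraction of the loss variance
    explained by the per-bin mean losses, between 0 and 1.
    """
    values = [params[name] for params, _ in history]
    losses = [loss for _, loss in history]
    total = pvariance(losses)
    if total == 0:
        return 0.0
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # avoid a zero width if every value is identical
    groups = {}
    for value, loss in zip(values, losses):
        index = min(int((value - lo) / width), bins - 1)  # the maximum goes in the last bin
        groups.setdefault(index, []).append(loss)
    overall = mean(losses)
    between = sum(len(g) * (mean(g) - overall) ** 2 for g in groups.values()) / len(losses)
    return between / total


# Synthetic history: the loss depends only on x, never on y.
rng = random.Random(0)
history = []
for _ in range(200):
    params = {"x": rng.uniform(-10, 10), "y": rng.uniform(-10, 10)}
    history.append((params, params["x"] ** 2))

for name in ("x", "y"):
    print(name, round(influence(history, name), 2))
```

A binned measure is used instead of a plain linear correlation on purpose: for the Square example the loss x² is symmetric around 0, so its Pearson correlation with x is close to zero even though x fully determines the loss.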

Want fast results?

Completely hosted in the cloud, Oscar will happily manage hundreds of experiments running on your cluster.
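One way to picture many experiments running at once is a pool of workers that each ask for a job, train, and report the loss. The sketch below imitates that pattern on a single machine with threads. `RandomScientist` and `run_one` are hypothetical names, the stand-in uses plain random search, and on a real cluster each worker would call the hosted service over the network rather than a shared local object.

```python
import random
import threading
from concurrent.futures import ThreadPoolExecutor


class RandomScientist:
    """Hypothetical, thread-safe stand-in for an optimization service (random search)."""

    def __init__(self, seed=0):
        self._rng = random.Random(seed)
        self._lock = threading.Lock()  # suggest/update are called from many workers
        self.results = []              # list of (params, loss) pairs

    def suggest(self, experiment):
        with self._lock:
            return {name: self._rng.uniform(b["min"], b["max"])
                    for name, b in experiment["parameters"].items()}

    def update(self, job, result):
        with self._lock:
            self.results.append((job, result["loss"]))


def run_one(scientist, experiment):
    """One worker: ask for parameters, 'train', report the loss."""
    job = scientist.suggest(experiment)
    loss = job["x"] ** 2  # placeholder for a real, time-consuming training run
    scientist.update(job, {"loss": loss})
    return loss


experiment = {"name": "Square", "parameters": {"x": {"min": -10, "max": 10}}}
scientist = RandomScientist(seed=0)
with ThreadPoolExecutor(max_workers=8) as pool:
    futures = [pool.submit(run_one, scientist, experiment) for _ in range(100)]
    losses = [f.result() for f in futures]

best_job, best_loss = min(scientist.results, key=lambda r: r[1])
print(len(scientist.results), best_job, best_loss)
```

The lock matters because several workers may request or report jobs at the same moment; a hosted service would handle that coordination on its side.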